New Leak Uncovers AI-Assisted Mass Censorship on Facebook

Are governments weaponizing AI to reshape public discourse and whitewash their crimes?

Whistleblowers at Meta’s Integrity Organization have shared data with International Corruption Watch (ICW), revealing evidence of a mass censorship strategy that abuses Meta’s reporting system.

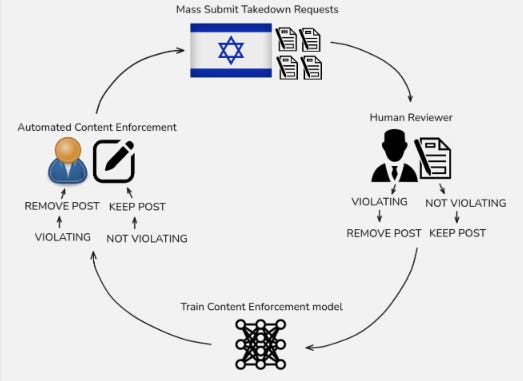

Normal Takedown Process

- User Reports: Any Facebook user can flag a post.

- AI Screening: The post is first checked by a content enforcement AI model that reviews the text and associated media. If the model is confident that the post violates policy, it removes the post.

- Human Review: If the model is not confident, the report is escalated to a human reviewer.

- Training Loop: If a human approves the takedown, the post is labeled and added to the AI’s training dataset, allowing the model to adapt in real time.
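The four steps above can be sketched as a small routing function. This is an illustrative sketch only, not Meta's implementation: the `handle_report` name, the `score` and `human_approves` callables, and the 0.9 confidence threshold are all assumptions made for the example.

```python
from typing import Callable, List, Tuple

# Hypothetical cutoff: the article does not say what "confident" means numerically.
CONFIDENCE_THRESHOLD = 0.9


def handle_report(
    post: str,
    score: Callable[[str], float],
    human_approves: Callable[[str], bool],
    training_data: List[Tuple[str, int]],
    threshold: float = CONFIDENCE_THRESHOLD,
) -> str:
    """Route one user report through AI screening, human review, and the training loop."""
    # AI screening: the model scores the post; a confident score removes it directly.
    if score(post) >= threshold:
        return "removed_by_ai"
    # Human review: low-confidence reports are escalated to a person.
    if human_approves(post):
        # Training loop: an approved takedown becomes a labeled training example.
        training_data.append((post, 1))
        return "removed_by_human"
    return "kept"


# Usage with stand-in scoring and review functions.
dataset: List[Tuple[str, int]] = []
outcome_ai = handle_report("post a", lambda p: 0.95, lambda p: False, dataset)
outcome_human = handle_report("post b", lambda p: 0.20, lambda p: True, dataset)
outcome_kept = handle_report("post c", lambda p: 0.20, lambda p: False, dataset)
```

Note that in this sketch only the human-approved branch writes to the training data, which is the path the rest of the article focuses on.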

Meta’s public description of this pipeline is available at https://transparency.meta.com/enforcement/detecting-violations/how-enforcement-technology-works.

Prioritizing Government Requests

Governments and privileged entities have special access to submit takedown requests. These requests are given priority and are sent directly to human reviewers. They can be submitted by form or direct email to Meta depending on which country is involved.

These reports are handled faster, and the reported posts are more likely to be removed. As in the normal process, when a human approves a request, the post is labeled and added to the training dataset.

When a large number of government-submitted requests are approved, the AI model receives a flood of labeled examples on which it is further trained.

When abused, this becomes a machine-learning attack known as “data poisoning.” The model eventually becomes heavily biased towards removing content that matches the pattern of the reports.
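To make the mechanism concrete, here is a toy simulation of how a flood of approved, near-identical reports can shift a model. It is a sketch under heavy assumptions: `ToyModel` is a made-up word-frequency classifier, nothing like a production system, and all example texts and counts are invented for illustration.

```python
from collections import Counter


class ToyModel:
    """Word-frequency classifier used only to illustrate poisoning (hypothetical)."""

    def __init__(self) -> None:
        self.removed = Counter()  # word counts seen in removed posts
        self.kept = Counter()     # word counts seen in kept posts

    def train(self, text: str, label: int) -> None:
        target = self.removed if label == 1 else self.kept
        target.update(text.lower().split())

    def score(self, text: str) -> float:
        """Average per-word removal likelihood; unseen words score 0.5 (Laplace smoothing)."""
        words = text.lower().split()
        per_word = [
            (self.removed[w] + 1) / (self.removed[w] + self.kept[w] + 2)
            for w in words
        ]
        return sum(per_word) / len(per_word)


model = ToyModel()
model.train("buy cheap pills now", 1)        # an ordinary violating example
model.train("news report from the city", 0)  # an ordinary benign example

benign_post = "news footage from the city"
score_before = model.score(benign_post)

# Flood of approved reports that all share the same vocabulary.
for _ in range(1000):
    model.train("footage from the city", 1)

score_after = model.score(benign_post)
print(f"before: {score_before:.2f}, after: {score_after:.2f}")
```

In this toy setup the benign post's score rises sharply after the flood, even though the post itself never changed. The only thing that changed is the training data.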

International Corruption Watch Investigation

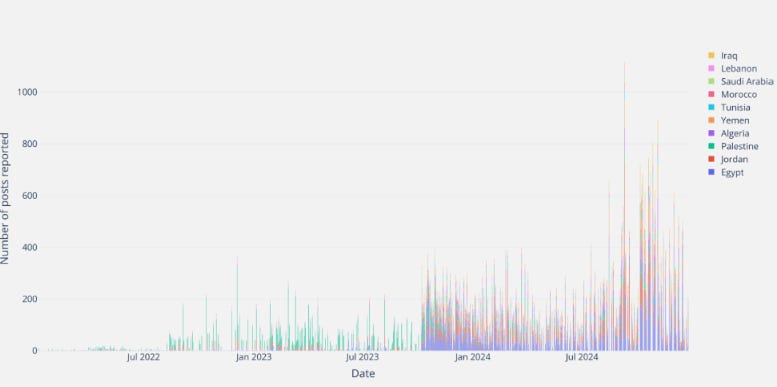

The ICW theorizes that governments such as Israel’s use mass reporting during a crisis to shape public opinion. In this report, ICW aggregated data and then analyzed takedown requests from the Israeli government.

Prior to the October 7th attack, Israeli government reports averaged ~100 per day. After the attack, the daily volume rose to between 150 and 400 reports per day.

What’s in a Report

Each report is associated with the source country where the post originated. Unlike other countries, the data shows that Israel focused on posts originating in the surrounding region, in countries like Egypt, Jordan, Palestine, Algeria, and Yemen. These few countries made up a whopping 69% of its reports. Israeli reports of posts originating from the US accounted for only 0.7% of the total report volume.
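A breakdown like this comes down to counting reports per origin country and dividing by the total. The sketch below shows that arithmetic on synthetic data; the country labels `A` through `D`, the counts, and the `origin_shares` helper are placeholders and do not come from the ICW dataset.

```python
from collections import Counter
from typing import Dict, Iterable


def origin_shares(origins: Iterable[str]) -> Dict[str, float]:
    """Return each origin country's share of reports, as a percentage."""
    counts = Counter(origins)
    total = sum(counts.values())
    return {country: 100 * n / total for country, n in counts.items()}


# Synthetic example: one origin label per reported post (not real data).
sample_origins = ["A"] * 3 + ["B"] * 2 + ["C"] * 1 + ["D"] * 4
shares = origin_shares(sample_origins)

# Combined share for a chosen group of countries, e.g. a regional grouping.
group = {"A", "B"}
group_share = sum(shares[c] for c in group)
print(f"group share: {group_share:.1f}%")
```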

The Timing

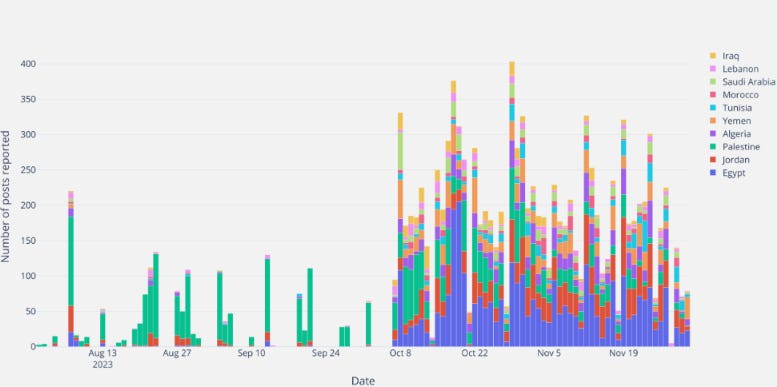

Zooming in, we can see how activity picked up after October 7th and shifted focus from Palestine to the surrounding region. Also of note is the drop in reports every seven days, coinciding with Shabbat.
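A weekly dip like this can be checked by averaging daily report counts per weekday. The following is a minimal sketch on synthetic counts with a Saturday dip built in by assumption; `mean_by_weekday` and all numbers are illustrative and are not taken from the leaked data.

```python
from collections import defaultdict
from datetime import date, timedelta
from typing import Dict

WEEKDAYS = ["Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday"]


def mean_by_weekday(daily_counts: Dict[date, int]) -> Dict[str, float]:
    """Average the number of reports for each day of the week."""
    buckets = defaultdict(list)
    for day, count in daily_counts.items():
        buckets[WEEKDAYS[day.weekday()]].append(count)
    return {name: sum(values) / len(values) for name, values in buckets.items()}


# Synthetic four-week series: 200 reports per day, 50 on Saturdays (assumed).
start = date(2023, 10, 9)  # a Monday
counts = {}
for offset in range(28):
    day = start + timedelta(days=offset)
    counts[day] = 50 if day.weekday() == 5 else 200

means = mean_by_weekday(counts)
quietest_day = min(means, key=means.get)
print(quietest_day, means[quietest_day])
```

A consistently lower average on one weekday across several weeks is what a seven-day dip looks like in the numbers.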

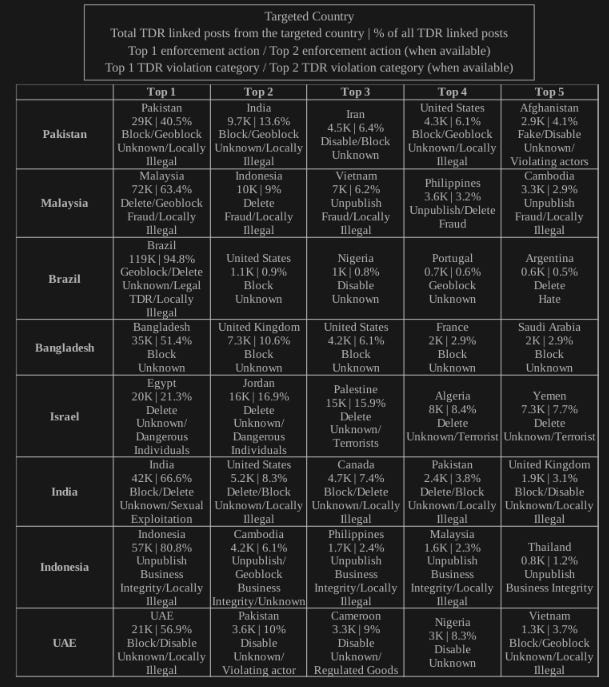

Although Israel has the most takedown requests per capita, there are other governments also sending large numbers of reports, including Pakistan, Malaysia, Brazil, Bangladesh, India, Indonesia, and the UAE.

Remarkably, these countries focus on removing posts within their own countries and for fairly different removal categories (“locally illegal”).

Here’s a graph from the report showing top reporting countries versus the nations they reported:

A Bit of Poison for Your Data

The ICW team examined all reports automatically flagged as “terroristic” by Meta’s AI, across every country. A huge spike followed October 7th, which suggests the model was overfitting to the reported content.

Meta’s AI became hypersensitive to any content featuring news footage or discussion of Palestine, leading to large-scale removal worldwide.

This is equivalent to content being censored across all countries at the same time.

Ctrl C You Later

According to the ICW report, the Israeli government used a single template for every takedown request. It contained:

- A description of the October 7th attack and an official Israeli account

- Citations of Israeli counter-terrorism laws

- Links to multiple reported posts

The report alleges identical requests were sent tens of thousands of times without any additional context or reasoning for each unique post.

The data indicates that Meta approved these reports 94% of the time, with an average human review time of 30 seconds. The approval rate was far higher, and the review far quicker, than for other countries.
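Figures like an approval rate and an average review time can be derived from per-report review records. Here is a hedged sketch of that computation; the `Review` record, the `summarize` helper, and the requester labels `X` and `Y` with their values are all invented for illustration and are not the leaked data.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Review:
    requester: str   # who submitted the takedown request
    approved: bool   # whether the human reviewer approved it
    seconds: float   # how long the human review took


def summarize(reviews: List[Review]) -> Dict[str, Dict[str, float]]:
    """Compute approval rate and mean review time per requester."""
    grouped: Dict[str, List[Review]] = {}
    for review in reviews:
        grouped.setdefault(review.requester, []).append(review)
    return {
        requester: {
            "approval_rate": mean([1.0 if r.approved else 0.0 for r in items]),
            "mean_seconds": mean([r.seconds for r in items]),
        }
        for requester, items in grouped.items()
    }


# Synthetic records (not real data).
records = [
    Review("X", True, 20.0), Review("X", True, 30.0),
    Review("X", True, 40.0), Review("X", False, 30.0),
    Review("Y", True, 100.0), Review("Y", False, 140.0),
]
summary = summarize(records)
print(summary)
```

Comparing these two numbers across requesters is the kind of analysis that would surface an unusually high approval rate paired with an unusually short review.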

This suggests there’s an unusual bias towards Israeli-originating reports.

Scale of the Impact

ICW estimates that ~38.8M posts have been actioned as a result of the data poisoning of the content enforcement model. According to ICW, these posts were removed not because they violated policy but because the model was over-trained on mass reports from the government.

The ICW report may gain credibility from an earlier Human Rights Watch analysis that showed Israel’s use of mass reporting to suppress critics by reporting posts as “spam.” Meta later acknowledged the unintended censorship and issued an apology.

Conclusion

This indicates a new paradigm of AI-assisted censorship where a feedback loop is used:

Privileged, high-volume government reports → Rapid human approval → AI retraining → AI tuned to censor similar content

When the inputs to content AI are manipulated and accepted, the resulting model bias can silence legitimate discourse.

It would be extremely concerning if governments weaponized AI to reshape public discourse and whitewash their crimes.

Since this type of activity is not new and was acknowledged by Meta, it’s unlikely we will ever see fair moderation on any large platform. Alternative decentralized platforms are desperately needed.

What types of social media do you see becoming popular in the future?

Should social media be moderated by AI?

Would this issue have been lessened if human review did its job?

This is a segment from #TBOT Show Episode 10.
